We all recognize the power of disruptive innovation as it topples influential firms and fuels the rise of embryonic startups into mega-corporations. For example, the personal computer disrupted makers of minicomputers, typewriters, and many other products. As a result, once-tiny Microsoft and Intel grew into mega-success stories. Similarly, the smartphone disrupted the innovators of PDAs, digital cameras, and music players. Although we hail these disruptive innovations, the microprocessor is at the core of them. Without the invention of the microprocessor, none of those innovations could have grown into a creative wave of destruction with disruptive effects. But the invention alone was not sufficient. It is the evolution of the microprocessor that has been fueling disruptive innovations.

Like many other inventions, the microprocessor emerged in primitive form, with little or no disruptive innovation power. Its continued evolution has been unleashing its latent creative destruction capacity. Since its invention as an integrated circuit containing only 2,300 transistors, the microprocessor has kept evolving, gaining transistors, clock speed, and many other features. For example, the Intel Core i7 processor powering many of today’s laptop computers has 774 million transistors, while Apple’s A15 Bionic chip powering the iPhone has 15 billion transistors.
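To put the growth in transistor count into perspective, the two figures quoted above can be turned into an average doubling period. The following is a minimal sketch, not a rigorous model: it assumes steady exponential growth, and the 2021 date for the 15-billion-transistor chip and the function name `doubling_time_years` are assumptions made for illustration.

```python
import math


def doubling_time_years(count_start, count_end, years_elapsed):
    """Average years per doubling, assuming steady exponential growth."""
    doublings = math.log2(count_end / count_start)
    return years_elapsed / doublings


# Transistor counts quoted in the article.
intel_4004_transistors = 2_300             # Intel 4004, 1971
apple_chip_transistors = 15_000_000_000    # Apple chip; year 2021 is an assumption

period = doubling_time_years(
    intel_4004_transistors, apple_chip_transistors, 2021 - 1971
)
print(f"Average doubling period: {period:.1f} years")
```

Under these assumptions, the transistor count has doubled on average a little more often than once every two and a half years, which is the steady compounding that the rest of this article treats as the fuel for disruption.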

Defining microprocessor:

A microprocessor is a semiconductor (typically silicon) chip in charge of fetching instructions and data, executing computing instructions such as adding, subtracting, and comparing numbers, and controlling the flow of the program. It integrates numerous components such as transistors, resistors, and capacitors, which form digital logic building blocks such as AND, OR, XOR, and NOT gates. These gates are combined into the arithmetic, logic, and control circuitry required to perform the functions of a computer’s central processing unit. At each clock pulse, instructions and data are fetched, processed, and moved, both within the chip and to and from the outside.
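The definition above can be illustrated with a toy model. The sketch below builds a 4-bit adder out of AND, OR, and XOR gates and then uses it inside a small fetch-and-execute loop. It is only an illustration: the opcodes (`LOAD`, `ADD`, `STORE`, `JNZ`, `HALT`) and the function names are hypothetical and do not correspond to the instruction set of the Intel 4004 or any other real processor.

```python
# Logic gates operating on single bits (0 or 1).
def AND(a, b):
    return a & b


def OR(a, b):
    return a | b


def XOR(a, b):
    return a ^ b


def full_adder(a, b, carry_in):
    """Add three bits using only gates; returns (sum_bit, carry_out)."""
    partial = XOR(a, b)
    sum_bit = XOR(partial, carry_in)
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return sum_bit, carry_out


def add_4bit(x, y):
    """Ripple-carry addition of two 4-bit values; returns (sum, carry)."""
    result, carry = 0, 0
    for i in range(4):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry


def run(program, data):
    """Toy fetch-and-execute loop with a hypothetical instruction set."""
    acc, pc = 0, 0                       # accumulator and program counter
    while True:
        opcode, operand = program[pc]    # fetch the next instruction
        pc += 1
        if opcode == "LOAD":             # move data into the accumulator
            acc = data[operand]
        elif opcode == "ADD":            # arithmetic through the gate-level adder
            acc, _ = add_4bit(acc, data[operand])
        elif opcode == "STORE":          # move the result back to memory
            data[operand] = acc
        elif opcode == "JNZ":            # control flow: jump if accumulator != 0
            if acc != 0:
                pc = operand
        elif opcode == "HALT":
            return data


program = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT", None)]
memory = run(program, [5, 9, 0])
print(memory[2])  # 14
```

Each pass through the `while` loop stands in for the work done on a clock pulse: an instruction is fetched, processed, and its result moved. A real microprocessor does this in hardware, with far more instructions and registers.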

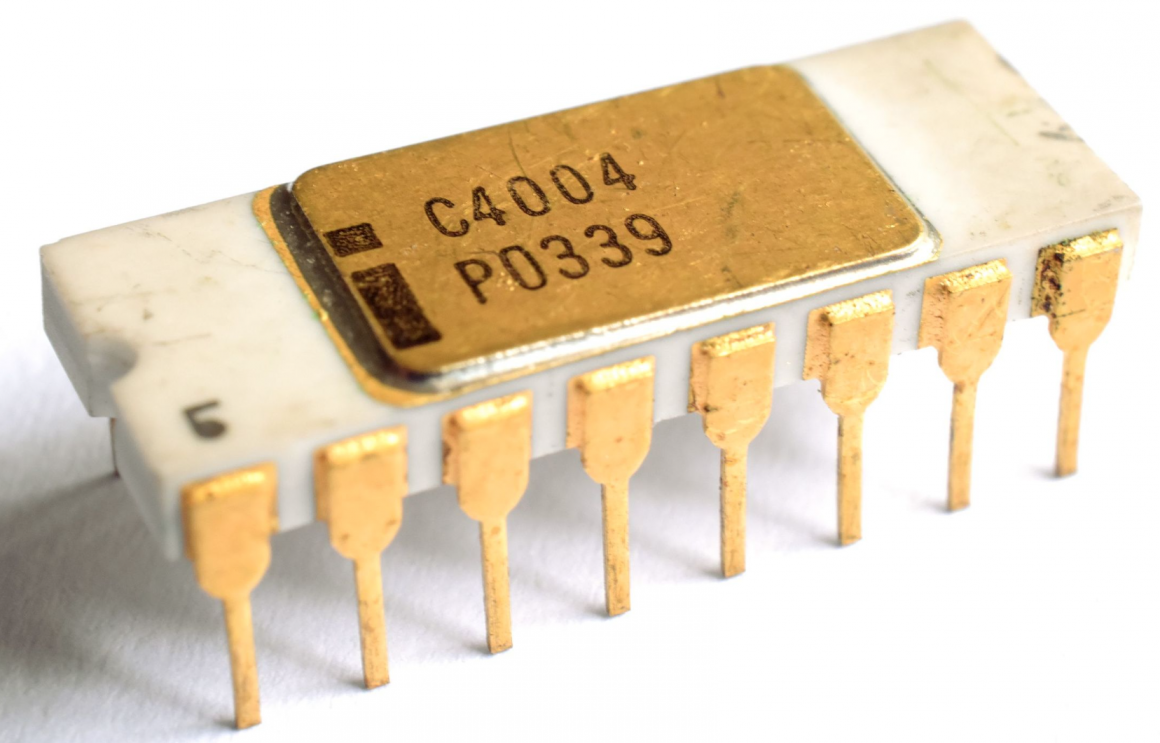

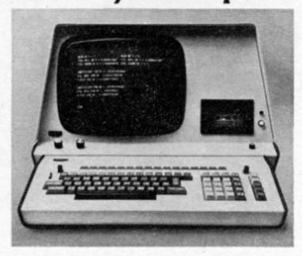

The microprocessor started its journey as the 4-bit Intel 4004. Its early applications included the Busicom 141-PF desktop calculator (1971), the Intel SIM-4 development system (1972), and the Wang 1222 word processor (1975), among others. For sure, these innovations were not creative destruction forces with disruptive innovation effects.

Generations of Microprocessors:

Microprocessor evolution is marked by several factors: the number of transistors, the instruction set type (complex or reduced), clock speed, functionality, the width of the instruction and data buses, and the silicon technology node. A snapshot of this evolution is given in the following eight generations. However, the objective of this write-up is to shed light on the role of microprocessor evolution in powering disruptive innovations.

- First Generation: The emergence of the 4-bit Intel 4004 and the 8-bit Intel 8008 during 1971-1972 defines the first generation.

- Second Generation: From 1973 to 1978, second-generation 8-bit microprocessors such as the Intel 8085 and the Motorola 6800 and 6801 came into existence.

- Third Generation: 16-bit microprocessors, emerging in 1979 and 1980, form the third generation. Examples include the Intel 8086, 80186, and 80286 and the Motorola 68000 and 68010.

- Fourth Generation: From 1981 to 1995, 32-bit microprocessors emerged, defining the fourth generation. Popular examples are the Intel 80386 and Motorola 68020.

- Fifth Generation: This is the era of 64-bit microprocessors, marked by the Intel Pentium. Celeron and dual-, quad-, and octa-core processors came into existence.

- Sixth Generation: Microprocessors having a graphics processing unit (GPU) represent the sixth generation, led by NVIDIA.

- Seventh Generation: Systems on chip (SoCs) combining a CPU, a GPU, and other functional units, such as wireless communication for smartphones, define the seventh generation. Examples include Apple A-series chips, leading to the Bionic ones. This generation also includes FPGA-enabled, mission-centric customized CPUs.

- Eighth Generation: Recently, a generation of microprocessors having Intelligence Processing Units (IPUs) has started to emerge. They target machine learning applications for building intelligent machines like autonomous vehicles.

Microprocessor invention:

The journey of microprocessor invention began in 1969, when a Japanese calculator manufacturer called Busicom hired Intel. Intel’s job was to create chips for a calculator that Busicom had designed, which led to a chipset consisting of four integrated circuits (ICs). As part of this assignment, Intel developed and commercialized the world’s first single-chip microprocessor, the Intel 4004. This single chip was able to play the role of the central processing unit (CPU), which combines arithmetic and control logic functions.

Source:

https://en.wikipedia.org/wiki/Intel_4004

Subsequently, the microprocessor emerged as the Intel 4004 chip in 1971, at a price tag of $60 apiece. At its root is the invention of a technique for producing multiple transistors on a single silicon chip as an integrated circuit. At Fairchild Semiconductor in 1959, Robert Noyce invented the technique for creating the first monolithic integrated circuit chip. On the way to the invention of the microprocessor, it was the second major invention after the transistor. As producing multiple transistors on a single chip reduces cost and increases quality, the race to produce silicon chips with a growing number of transistors started.

Hence, makers of electronic and computer products began iteratively redesigning their products to reduce the discrete component count, leading to a higher density of integrated circuits. Furthermore, the underlying process technologies, like photolithography, kept improving, supporting the production of transistors with smaller dimensions and thus growing chip density.

A multinational design team deserves the credit for microprocessor invention:

The underlying force behind the microprocessor invention was the need to reduce the discrete components required to make Busicom calculators. The credit for the invention goes to a team of four engineers from three countries: Federico Faggin (Italian), Marcian Hoff and Stanley Mazor (Americans), and Masatoshi Shima (Japanese). The Intel 4004 had only 2,300 transistors, far fewer than the 774 million transistors of the Intel Core i7 processor. For the Intel Core i9 processor, the eight-core 11th Gen die measures around 270 mm² and consists of about 6 billion transistors.

Intel’s microprocessor evolution to fuel personal computer disruptive innovation:

For sure, the Wang 1222 word processor built around the Intel 4004 did not disrupt typewriters. That does not necessarily mean that the Busicom 141-PF desktop calculator did not grow into a creative destruction force against the use of accounting clerks crunching numbers with brain, pencil, and paper. However, Intel kept taking advantage of processing technologies to pack in more transistors, increase the clock speed, and expand the instruction set and data width. Subsequently, Intel released the 8-bit 8080 and 8085 and the 16-bit 8086 and 8088 microprocessors. But Intel was not alone in developing and evolving microprocessors. Motorola came up with the 6800 microprocessor in 1974, followed by the 68000 in 1979. Among others, Zilog came up with the Z80 series of 8-bit microprocessors, compatible with the Intel 8080.

The turning point at which microprocessor evolution began to fuel disruptive innovation came with IBM’s decision to design a personal computer around Intel’s 8086 family; the original IBM PC used the 8088, a variant of the 8086 with an 8-bit external data bus. Compared with the 4004’s 2,300 transistors, the 8086 had 29,000. Where the 4004 was a 4-bit processor, the 8086 was a 16-bit design. To meet the demand of major applications like word processors and spreadsheets, Intel kept releasing successively better versions, such as the 80186 and 80286. The upgrade from the text-based user interface of Microsoft DOS to the graphical user interface of Windows demanded further processing power. Hence, Intel evolved its microprocessors to higher levels like the 80486 and Pentium. As a result, applications running on the PC became a creative destruction force against typewriters, disrupting typewriter makers.

Client-server computing on PCs disrupted minicomputer makers:

Furthermore, growing processing power and connectivity made it possible to run business applications in client-server environments. As a result, the personal computer became a disruptive force against minicomputer makers. Consequently, high-performing companies like DEC, NCR, and Honeywell suffered from the disruptive effects of the PC innovation that grew out of microprocessor evolution.

Motorola’s Microprocessor evolution fueled Apple’s computer as disruptive innovation

Unlike IBM, Apple turned to Motorola’s microprocessors for its breakthrough product. Its first computer, the Apple I, released in 1976, used the 8-bit MOS Technology 6502 microprocessor. Of course, this computer was far from being a disruptive innovation. The inflection point emerged with the release of the graphical user interface (GUI)-based Macintosh. Apple used the Motorola 68000 16-bit microprocessor, running at about 8 MHz, to power the Macintosh. But the processor was not capable enough to unleash the potential of the GUI. Hence, Motorola’s processor evolved into the Motorola 68020, running at 16 MHz. As a result, the Apple Macintosh grew into a disruptive innovation against text-interface-based computing and against publishing and composing machines.

Microprocessor evolution is at the core of the rise of smartphone disruptive innovation force

In the beginning, mobile handsets were simple electronic devices. However, a significant change in mobile handset design came with the emergence of the iPhone in 2007. At the core of the iPhone design is a multi-touch-based user interface. To power it, Apple turned electronic gadgets into powerful computers, powered by its A-series microprocessor, a system on chip (SoC). In addition to ARM-based processing cores (CPU), it also comprises a graphics processing unit (GPU), cache memory, and other electronics for mobile computing functions within a single physical package. To keep unleashing the latent potential through sustaining innovation of the iPhone design, Apple kept evolving its A-series SoC, leading to the A15 Bionic chip with 15 billion transistors, running at 3.23 GHz. Other makers of smartphone processors include Qualcomm, Samsung, and HiSilicon.

Natural progression leading to invention and evolution of microprocessor—powering disruptive innovation

The invention of the microprocessor is the outcome of the natural progression toward higher-level integration of discrete devices. The advancement of processing technologies and electronic design automation tools kept widening the door to making transistor feature dimensions smaller. Over 50 years, the feature dimension has come down from the 10 micrometers used to make the Intel 4004 chip to the 5 nm used to produce Apple’s latest A-series chip (as of 2020). Chip designers kept taking advantage of this progression, which first made it possible to place the core computational functional units on a single chip, giving birth to the Intel 4004. However, at its birth, this invention was not powerful enough to fuel creative destruction leading to disruptive innovation. Instead, it was the subsequent microprocessor evolution that fueled disruptive innovations.

For sure, the adoption of the microprocessor in the design of the IBM PC and Apple computers created the inflection point. But in the absence of the continued progression of processing technologies, microprocessor evolution could not have taken place. The microprocessor would then have failed to keep nurturing those creative forces to unleash the disruptive innovation effect.

Similarly, Apple’s decision to reinvent the mobile handset as the iPhone created a new wave of creative destruction. As with the PC wave, microprocessor evolution has been a vital force powering it as a disruptive innovation. Furthermore, although the PC wave driving microprocessor evolution made Intel a global success story, its failure to tap into the rise of smartphones, which fueled the next wave of evolution, has blunted Intel’s edge. On the other hand, TSMC has leveraged the smartphone wave to grow into a global leader in silicon processing.

What the next innovation wave demanding further microprocessor evolution could be is intriguing indeed. Could the Intelligence Processing Unit (IPU) for powering autonomous vehicles create the next wave of microprocessor evolution?
