Every industrial revolution requires a set of core technologies. These technologies power innovations that cause creative destruction in major industries and firms. Robotics is one of the core technologies of the unfolding Fourth Industrial Revolution. However, robotics is itself a collection of disruptive technologies. The progression of these technologies will keep strengthening the innovation waves of robotics and automation. Technologies like sensing and machine learning are enabling the development of artificially intelligent (AI) robots. As a result, even industrial robots are beginning to behave as AI machines capable of dealing with variation. Instead of doing just repetitive, dull, and dirty jobs, AI robots have been taking over quality inspection jobs as industrial inspection robots. Besides, AI robot cars have already started driving in outdoor environments. Mobile robots, medical robotics, intuitive robotic surgery, personal AI robots, and agricultural robots are emerging as well.

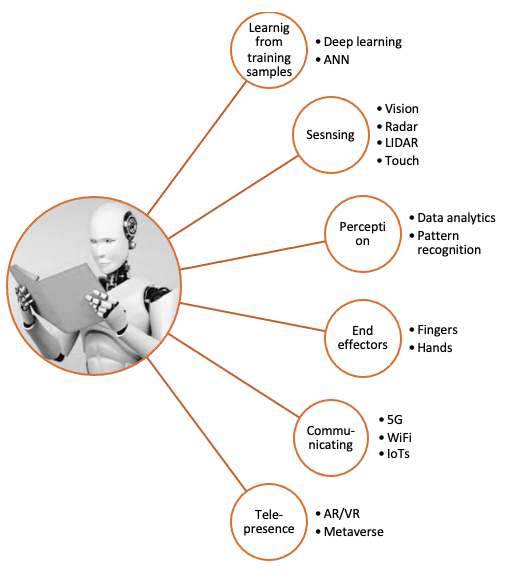

Technologies for robots encompass learning from training samples, sensing, perceiving, communicating, making decisions, taking actions, evaluating their own actions, and learning by doing. Machine learning techniques like deep learning, reinforcement learning, and neural networks are vital for making AI robots. These technologies form the basis of artificial intelligence and robotics engineering.

Envisioning the applications of robots requires monitoring and predicting the progress of these technologies. Moreover, the nature and timing of the creative destruction unfolding due to robotics will be influenced by the growth rate and maturity of the underlying technologies. Hence, the disruptive technologies powering robotics call for careful monitoring and a management response. A few of these robotic technologies are explained here. They are disruptive because their progress can turn robotic innovations into forces that disrupt existing industries.

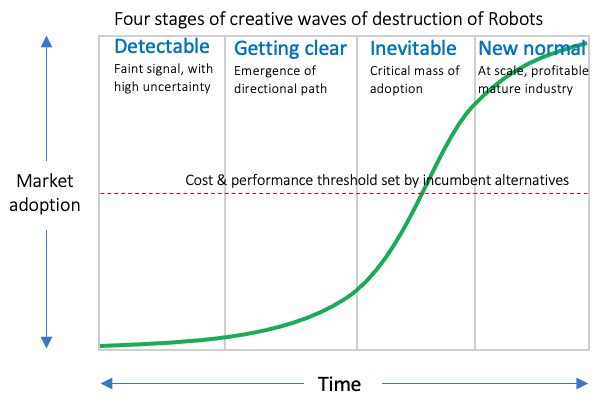

Evolution of Technologies of Robots for driving creative waves of destruction

Advances in robot technologies have been fueling creative waves of destruction in different application areas. For example, industrial inspection robots are becoming the new normal in manufacturing. Similarly, robot cars have been gaining momentum toward rollout. Such creative waves of destruction grow and unfold in phases, forming an S-curve-like life cycle.
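An S-curve-like life cycle is often illustrated with a logistic function: slow early growth, a rapid middle phase, and saturation at a ceiling. The sketch below is only an illustration of that shape. The function name `s_curve`, its parameters, and the numbers are assumptions made for this example, not data about any real robotics market.

```python
import math


def s_curve(t, ceiling=1.0, growth_rate=1.0, midpoint=0.0):
    """Logistic function: slow start, rapid growth, then saturation at `ceiling`."""
    return ceiling / (1.0 + math.exp(-growth_rate * (t - midpoint)))


# Hypothetical adoption level (in percent) over eleven time steps.
years = range(0, 11)
adoption = [s_curve(t, ceiling=100.0, growth_rate=1.0, midpoint=5.0) for t in years]

for year, level in zip(years, adoption):
    print(f"year {year:2d}: {level:5.1f}%")
```

The `midpoint` parameter marks the inflection point where growth is fastest, and `growth_rate` controls how quickly a wave moves from its slow early phase to saturation.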

End effector, hand, and finger technologies for robots

To perform many tasks, robots need a suitable end effector to grasp, lift, manipulate, and release objects without causing undesirable effects. For example, we may ask a household robot to pick an appropriately ripe mango from a basket. In certain cases, those end effectors will look like human hands, with multiple fingers. Significant progress is still needed before such tasks can be delegated to robots. Progress in making robots’ hands similar to ours will significantly improve the comparative advantage of robots over humans in numerous tasks, from stitching tissue to handling fabrics.

Research is underway to develop a robot hand with as many as 129 sensors and 24 joints, with movements very similar to those of a human hand. This envisioned hand will include the movement of the thumb and even the flexion of the palm needed to move the little finger. These are vital disruptive technologies for powering robotics to fuel waves of innovation. The progression of this technology alone will have major implications for the application of robots, affecting the future of work.

Sensing at the tip of the finger

Our fingertip looks very simple, yet the tactile information we collect from it contributes to the quality of grasping. To let robots perform some tasks, they need fingertips like ours. We are far from there, but research is underway. One technique uses an optical fingertip sensor that measures both the interaction forces and the proximity between the fingertips and the environment. A combination of multiple sensing modalities is needed at the tip of a finger, so research is also underway on a fingertip sensor with resistive touch and temperature sensors.

In addition to touch, proximity, and temperature, we also use a fingertip to measure geometric information, like the curvature of an object, and physical information, like contact force. Discrete sensors are often unsuitable for this. To sense the contact position and normal, the 3-D contact force, and the local radius of curvature of the object in contact with the fingertip, a camera is installed beneath a fingertip made of silicone.

Robot skin

Despite many claims about robots’ power, robots so far do not have skin that senses like human skin. The lack of feeling and response to physical contact limits the applications of robotics. This capability is essential for services that require robots to come into increasingly close contact with people, as in elderly care or hospitality service delivery. Progress is being made toward robot skin with 13,000 sensors. Compared with human beings’ 5 million skin receptors, that is primitive. Nevertheless, it is far better than having no sense of touch at all. Continued progress in this technology will make touch-enabled, safe robot operation possible. It will enable robots to detect contact with unseen obstacles and to apply the correct force for a task without damaging objects, people, or the robot itself.

Stereo vision technology for robots

Stereo vision-based depth-sensing technology is expanding the application of robots. With depth perception, robots are acquiring the capability to pick objects from a bin. E-commerce companies in particular need such robots to pack objects in response to orders. However, it’s a very complex task for robots. The approach requires a point cloud map comprising millions of data points of the object space, and progress is being reported on creating it from the 3D depth map generated by a stereo machine vision camera. It appears that 3D bin picking with machine vision will enable robots to take over an increasing number of tasks from humans.
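To make the step from a stereo depth map to a point cloud concrete, here is a minimal sketch. It assumes a rectified stereo pair and the standard pinhole relation depth = focal length × baseline / disparity. The function names, the camera parameters (`focal_px`, `baseline_m`, `cx`, `cy`), and the tiny disparity map are hypothetical values chosen for illustration, not taken from any particular bin-picking system.

```python
def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        return None  # no valid stereo match at this pixel
    return focal_px * baseline_m / disparity_px


def disparity_map_to_point_cloud(disparity, focal_px, baseline_m, cx, cy):
    """Back-project every valid pixel (u, v) into a 3D point (x, y, z)."""
    points = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            z = disparity_to_depth(d, focal_px, baseline_m)
            if z is None:
                continue
            x = (u - cx) * z / focal_px
            y = (v - cy) * z / focal_px
            points.append((x, y, z))
    return points


# Hypothetical 2x3 disparity map in pixels; 0.0 marks a pixel with no match.
disparity = [
    [0.0, 35.0, 70.0],
    [35.0, 70.0, 70.0],
]
cloud = disparity_map_to_point_cloud(
    disparity, focal_px=700.0, baseline_m=0.1, cx=1.0, cy=0.5
)
for point in cloud:
    print(point)
```

A real camera produces millions of such points per frame, and a bin-picking pipeline would still need to segment objects and plan a grasp on top of the cloud.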

Optical flow technology for robots to cause creative destruction

While studying the visual stimulus provided to humans and other animals as they move through the world, American psychologist James J. Gibson developed the concept of optical flow. Optical flow is the pattern of apparent motion of objects, surfaces, and edges in a visual scene, caused by the relative motion between an observer and the scene. Researchers have been using optical flow to develop robotic applications in areas that require object detection and tracking, image-dominant plane extraction, movement detection, robot navigation, and visual odometry. For example, robots that grasp moving objects require this capability. This technology has also been recognized as useful for developing autonomous driving and flying capability.
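One common way to estimate optical flow is the Lucas-Kanade least-squares method, which assumes that brightness stays constant and motion is small between two frames. The article does not say which method the cited research uses, so the sketch below is only an illustration on a synthetic image. The helper names `make_frame` and `estimate_flow` and the test pattern are assumptions made for this example.

```python
import math


def make_frame(width, height, shift_x=0.0, shift_y=0.0):
    """Synthetic smooth image, translated by (shift_x, shift_y) pixels."""
    return [
        [math.sin(0.3 * (x - shift_x)) + math.cos(0.2 * (y - shift_y))
         for x in range(width)]
        for y in range(height)
    ]


def estimate_flow(frame1, frame2):
    """Single-window Lucas-Kanade: least-squares fit of Ix*u + Iy*v + It = 0."""
    sxx = sxy = syy = sxt = syt = 0.0
    height, width = len(frame1), len(frame1[0])
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            ix = (frame1[y][x + 1] - frame1[y][x - 1]) / 2.0  # spatial gradient in x
            iy = (frame1[y + 1][x] - frame1[y - 1][x]) / 2.0  # spatial gradient in y
            it = frame2[y][x] - frame1[y][x]                  # temporal difference
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("not enough texture to estimate motion")
    # Solve the 2x2 normal equations for the flow vector (u, v).
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v


first = make_frame(20, 20)
second = make_frame(20, 20, shift_x=0.2, shift_y=-0.1)
u, v = estimate_flow(first, second)
print(f"estimated flow: u={u:.3f}, v={v:.3f} pixels per frame")
```

The estimate is approximate because the gradients are finite differences and the method linearizes the motion. Practical systems typically compute flow per window or per pixel and use image pyramids to handle larger displacements.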

X-ray and CT imaging

Many applications of robots require seeing through objects. For example, meat processing with robots requires robots to detect the position of bones buried in the meat. R&D efforts are in progress to develop this capability cost-effectively in robots, particularly for meat processing. To perform such tasks, “The first robot takes X-rays and a CT scan of the carcass, which generates a 3D model of its shape and size. Based on what the system sees in the model, another bot drives rotary knives between the ribs and cuts through the hanging carcass, using the spinal cord as a reference point.”

High precision surface measurement for construction robots

Some tasks, like laying floor tiles, require high-precision surface measurement. Robot applications are being developed for such tasks. Mobile robots with stereo cameras and a light striper for sensing show the possibility of replacing human workers in laying tiles. However, such applications require high-resolution imaging to identify tile seams and edges, assess the quality of automatic installation, and locate where the next tile should be placed.

Teaching through showing technologies for robots

Teaching robots to recognize objects is often quite complex. If the recognition of every object requires an individual algorithm, it’s quite an expensive exercise. However, many applications, like e-commerce order filling or picking objects from bins, require robots to learn quickly how to recognize different objects. Technology for teaching by showing is in progress, although it’s at an early stage. In demonstrations, robots are being taught, by being shown samples, to separate glass bottles from plastic ones or metal cans. This is a key disruptive technology for powering robotics innovation in a wide variety of applications.
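The idea of learning from shown samples instead of writing one algorithm per object can be sketched with a nearest-centroid classifier. This is a deliberately simple stand-in, not the method used in the demonstrations mentioned above. The three features (transparency, metallic response, and weight, each scaled to 0..1) and all sample values are hypothetical.

```python
import math
from collections import defaultdict


def learn_from_samples(shown_samples):
    """Average the feature vectors shown for each label (nearest-centroid training)."""
    sums = {}
    counts = defaultdict(int)
    for features, label in shown_samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def recognize(centroids, features):
    """Return the label whose learned centroid is closest to the new object."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Hypothetical features: (transparency, metallic response, weight), each scaled to 0..1.
shown = [
    ((0.90, 0.00, 0.80), "glass bottle"),
    ((0.85, 0.05, 0.70), "glass bottle"),
    ((0.80, 0.00, 0.10), "plastic bottle"),
    ((0.70, 0.00, 0.15), "plastic bottle"),
    ((0.00, 0.90, 0.10), "metal can"),
    ((0.05, 0.95, 0.12), "metal can"),
]
centroids = learn_from_samples(shown)
print(recognize(centroids, (0.88, 0.02, 0.75)))
```

Adding a new object class only requires showing more labeled samples and retraining, which is the appeal of teaching by showing. Real systems would learn from camera images with far richer features.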

High-speed hand-eye coordination for handling flexible materials

High-speed hand-eye coordination is opening a new era of robotics. A Georgia Tech start-up has patented computer vision systems that view fabric more accurately than the human eye. This technology is creating the possibility for robotic innovation to disrupt the $100 billion sewn products industry. Its development started with a grant from DARPA in 2012. To advance the technology further, the start-up raised an additional $4.5 million. High-speed, high-resolution image frames allow the computer to pick out individual threads in the fabric. This appears to be sufficient to mimic the human hand-eye coordination required for handling fabric, making sewing steps human-free. Therefore, countries with large stakes in apparel outsourcing should carefully monitor its progression.

Industrial Internet of Things (IIoT) technologies for robots

In the era of Industry 4.0, teleoperation and telerobotics in the Industrial Internet of Things (IIoT) form an emerging area of robotics. According to some market research findings, the overall robotics market for IIoT will be worth $45.73 billion by 2021. Advanced IIoT systems will also use digital twin technology to enable next-generation teleoperation. IIoT applications are supported by ICT infrastructure, including broadband communications, sensors, machine-to-machine (M2M) communications, and various Internet of Things (IoT) technologies.

Teletransportation of consciousness and telepresence technologies for robots

Within the context of robotics, telepresence is about making humans virtually present in a robot. Work is underway that allows users to feel present in a remote location and to pick up, hold, touch, and feel an object with a distant robotic hand. It’s like teleporting someone’s consciousness into a distant robot. Technologies like virtual and augmented reality (VR/AR) have been enabling us to build telepresence robots.

Space exploration was an early user of telepresence robotics technology. It’s envisioned that elderly care will benefit significantly from the rise of this technology. This is one of the disruptive technologies powering robotics that has the potential to blend human capability with machines. Indeed, it’s a technology to empower humans with robots.

Collaborative robots (cobots)

Last but not least is collaborative robotics. Collaborative robots work side by side with humans. Unlike pick-and-place robots working in isolation, they share tasks with humans to accomplish higher-level goals. The continued progression of some of the technologies discussed here will increasingly enable robots to share the workspace safely with human coworkers. In the future, collaborative service robots will perform a variety of functions. Already, collaborative industrial robots enable manufacturers to extend automation to final product assembly, finishing tasks, and quality inspection. Hence, industrial robotics companies like Fanuc have released collaborative robotic arms.

At the dawn of the Fourth Industrial Revolution, we are apprehensive about the rise of robotics. In particular, we are concerned about the future of work. Human-like machines such as humanoids, or movie characters like Shin Godzilla, are not yet capable enough to take over the human race. Nevertheless, the possibility of disruption is real. In particular, the continued progression of these 13 technologies powering robotics innovation will keep expanding both the breadth and depth of applications. Some of them may emerge as a major disruptive force. For example, the rise of Sewbot, with the power of high-speed hand-eye coordination for handling flexible materials, may disrupt the global apparel sourcing model. Consequently, apparel outsourcing companies in developing countries may suffer from disruption. Therefore, it is vital to pay attention to the disruptive technologies powering robotics.